Main question

Can we use bankruptcy models to predict supplier bankruptcies?

But first:

Does the Altman Z-score [still] pick up bankruptcy?

Dr. Richard M. Crowley

logodds(Double~sales) = -3.44 + 0.54 Holiday

logodds(Double~sales) = -3.44 + 0.54 Holiday

exp(0.54) = 1.72

1.72 / (1 + 1.72) = 0.63

type="response" to get probabilitiestest_data <- as.data.frame(IsHoliday = c(0,1))

predict(model, test_data) # log odds## [1] -3.44 -2.90predict(model, test_data, type="response") #probabilities## [1] 0.03106848 0.05215356These are a lot easier to interpret

Z = 1.2 x_1 + 1.4 x_2 + 3.3 x_3 + 0.6 x_4 + 0.999 x_5

This looks like a linear regression without a constant

More about this, from Altman himself in 2000: rmc.link/420class6

Can we use bankruptcy models to predict supplier bankruptcies?

But first:

Does the Altman Z-score [still] pick up bankruptcy?

Is this a forecasting or forensics question?

dlsrn == 2, then the firm went bankruptdldte# initial cleaning

df <- df %>% filter(at >= 1, revt >= 1, gvkey != 100338)

## Merge in stock value

df$date <- as.Date(df$datadate)

df_mve$date <- as.Date(df_mve$datadate)

df_mve <- df_mve %>% rename(gvkey=GVKEY)

df_mve$MVE <- df_mve$csho * df_mve$prcc_f

df <- left_join(df, df_mve[,c("gvkey","date","MVE")])## Joining, by = c("gvkey", "date")df <- df %>%

group_by(gvkey) %>%

mutate(bankrupt = ifelse(row_number() == n() & dlrsn == 2 &

!is.na(dlrsn), 1, 0)) %>%

ungroup()row_number() gives the current row within the group, with the first row as 1, next as 2, etc.n() gives the number of rows in the group# Calculate the measures needed

df <- df %>%

mutate(wcap_at = wcap / at, # x1

re_at = re / at, # x2

ebit_at = ebit / at, # x3

mve_lt = MVE / lt, # x4

revt_at = revt / at) # x5

# cleanup

df <- df %>%

mutate_if(is.numeric, funs(replace(., !is.finite(.), NA)))

# Calculate the score

df <- df %>%

mutate(Z = 1.2 * wcap_at + 1.4 * re_at + 3.3 * ebit_at + 0.6 * mve_lt +

0.999 * revt_at)

# Calculate date info for merging

df$date <- as.Date(df$datadate)

df$year <- year(df$date)

df$month <- month(df$date)We’ll check our Z-score against credit rating as a simple validation

# df_ratings has ratings data in it

# Ratings, in order from worst to best

ratings <- c("D", "C", "CC", "CCC-", "CCC","CCC+", "B-", "B", "B+", "BB-",

"BB", "BB+", "BBB-", "BBB", "BBB+", "A-", "A", "A+", "AA-", "AA",

"AA+", "AAA-", "AAA", "AAA+")

# Convert string ratings (splticrm) to numeric ratings

df_ratings$rating <- factor(df_ratings$splticrm, levels=ratings, ordered=T)

df_ratings$date <- as.Date(df_ratings$datadate)

df_ratings$year <- year(df_ratings$date)

df_ratings$month <- month(df_ratings$date)

# Merge together data

df <- left_join(df, df_ratings[,c("gvkey", "year", "month", "rating")])## Joining, by = c("gvkey", "year", "month")df %>%

filter(!is.na(Z),

!is.na(bankrupt)) %>%

group_by(bankrupt) %>%

mutate(mean_Z=mean(Z,na.rm=T)) %>%

slice(1) %>%

ungroup() %>%

select(bankrupt, mean_Z) %>%

html_df()| bankrupt | mean_Z |

|---|---|

| 0 | 3.939223 |

| 1 | 0.927843 |

df %>%

filter(!is.na(Z),

!is.na(bankrupt),

year >= 2000) %>%

group_by(bankrupt) %>%

mutate(mean_Z=mean(Z,na.rm=T)) %>%

slice(1) %>%

ungroup() %>%

select(bankrupt, mean_Z) %>%

html_df()| bankrupt | mean_Z |

|---|---|

| 0 | 3.822281 |

| 1 | 1.417683 |

fit_Z <- glm(bankrupt ~ Z, data=df, family=binomial)

summary(fit_Z)##

## Call:

## glm(formula = bankrupt ~ Z, family = binomial, data = df)

##

## Deviance Residuals:

## Min 1Q Median 3Q Max

## -1.8297 -0.0676 -0.0654 -0.0624 3.7794

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -5.94354 0.11829 -50.245 < 2e-16 ***

## Z -0.06383 0.01239 -5.151 2.59e-07 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 1085.2 on 35296 degrees of freedom

## Residual deviance: 1066.5 on 35295 degrees of freedom

## (15577 observations deleted due to missingness)

## AIC: 1070.5

##

## Number of Fisher Scoring iterations: 9Examples:

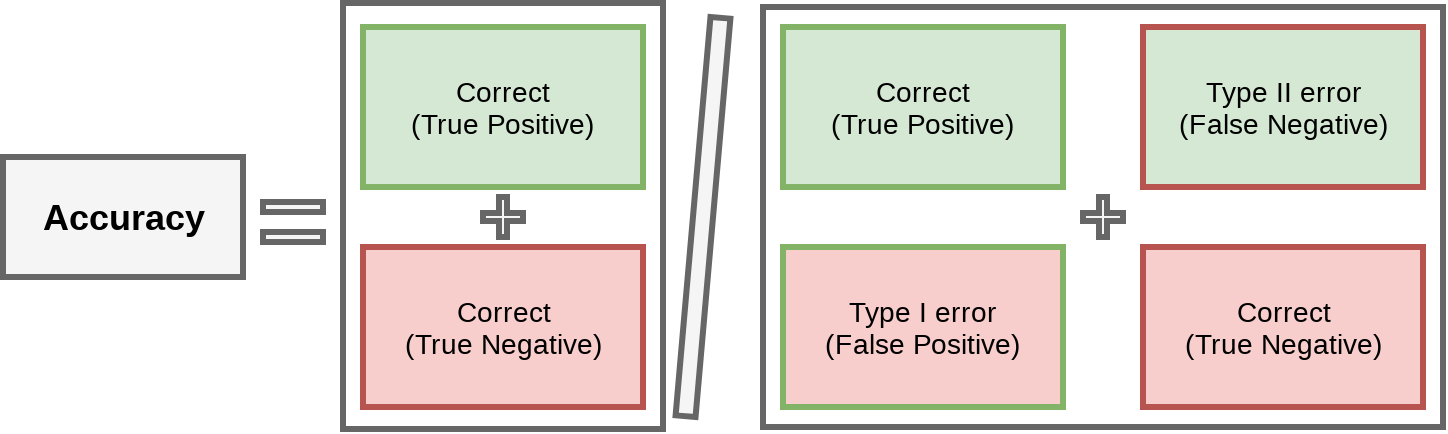

Correct 92.0% of the time using Z < 1 as a cutoff

Correct 99.7% of the time if we say firms never go bankrupt…

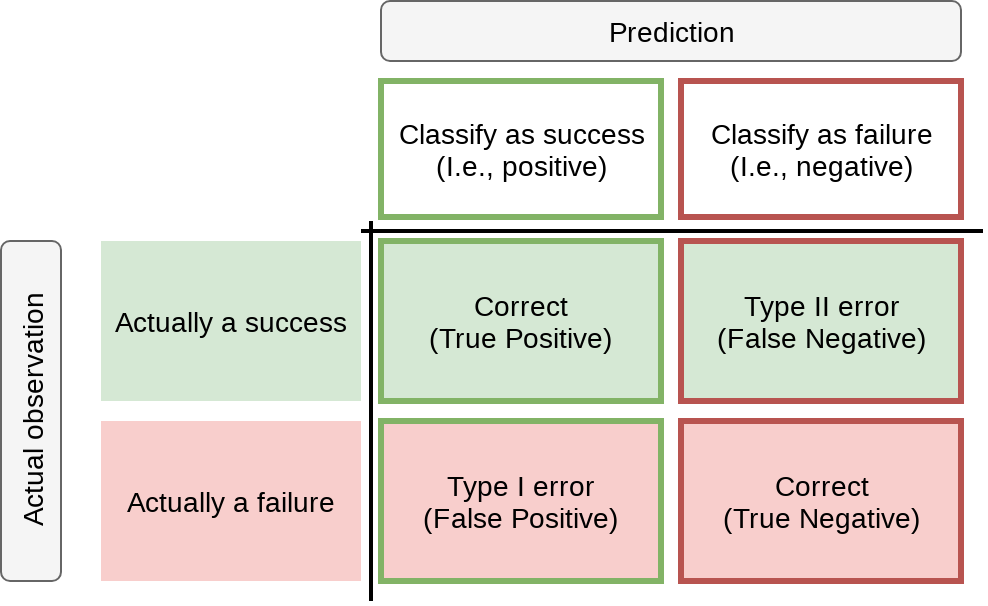

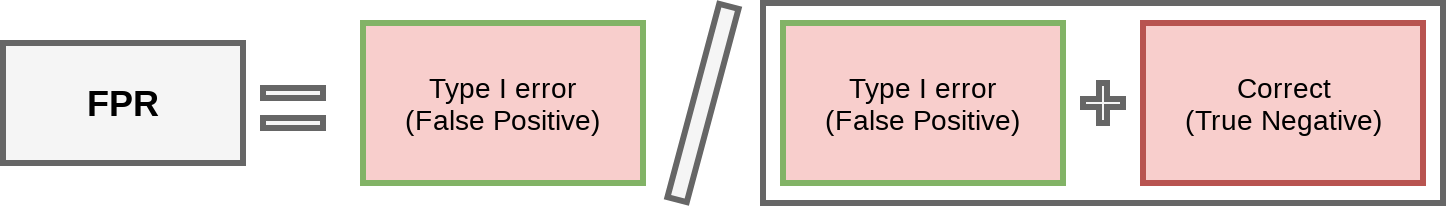

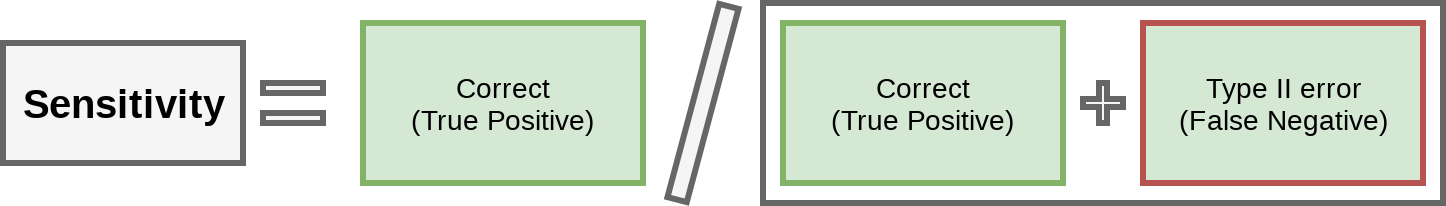

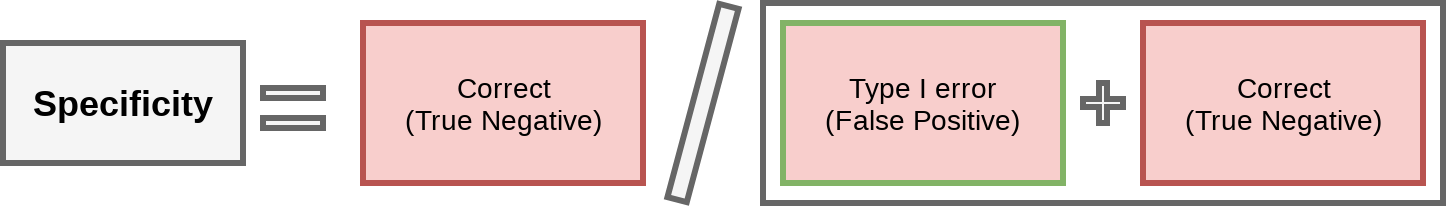

This type of chart (filled in) is called a Confusion matrix

We say that the company will go bankrupt, but they don’t

We say that the company will not go bankrupt, yet they do

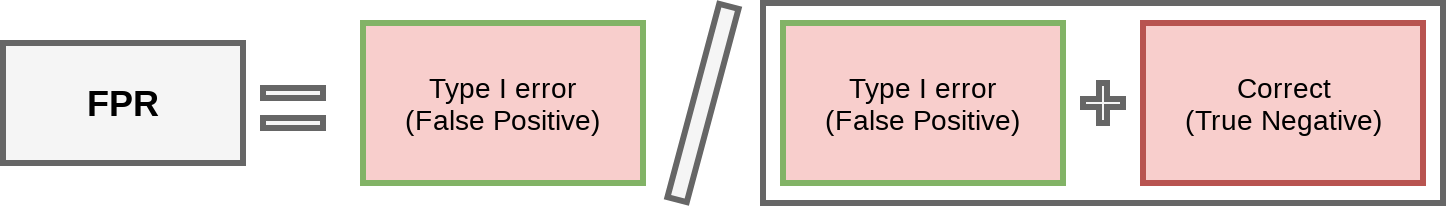

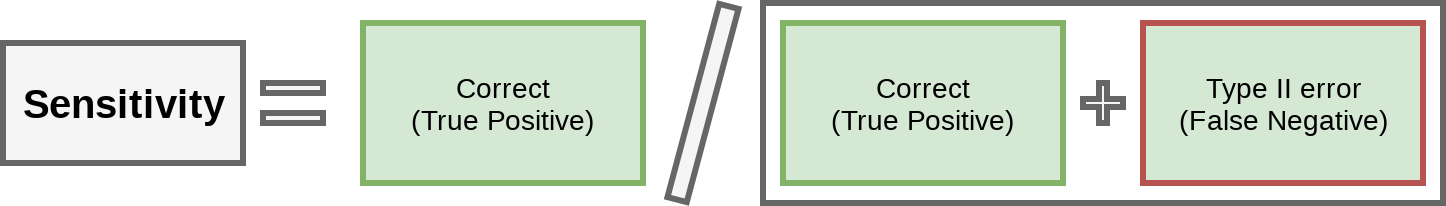

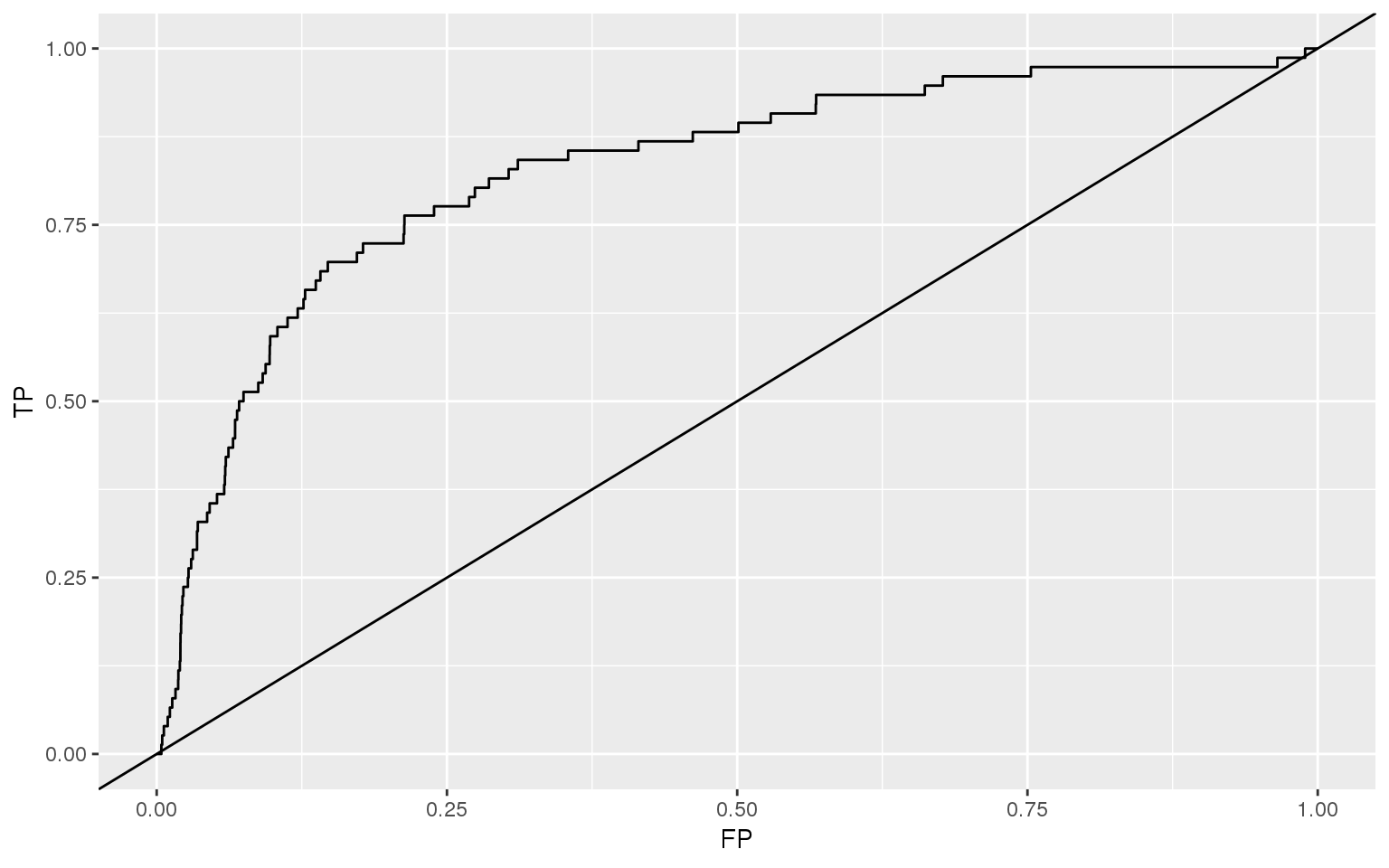

library(ROCR)

pred_Z <- predict(fit_Z, df, type="response")

ROCpred_Z <- prediction(as.numeric(pred_Z), as.numeric(df$bankrupt))

ROCperf_Z <- performance(ROCpred_Z, 'tpr','fpr')'tpr' is true positive rate'fpr' is false positive ratedf_ROC_Z <- data.frame(

FP=c(ROCperf_Z@x.values[[1]]),

TP=c(ROCperf_Z@y.values[[1]]))

ggplot(data=df_ROC_Z,

aes(x=FP, y=TP)) + geom_line() +

geom_abline(slope=1)

plot(ROCperf_Z)

auc_Z <- performance(ROCpred_Z, measure = "auc")

auc_Z@y.values[[1]]## [1] 0.8280943@ to pull out values, not $

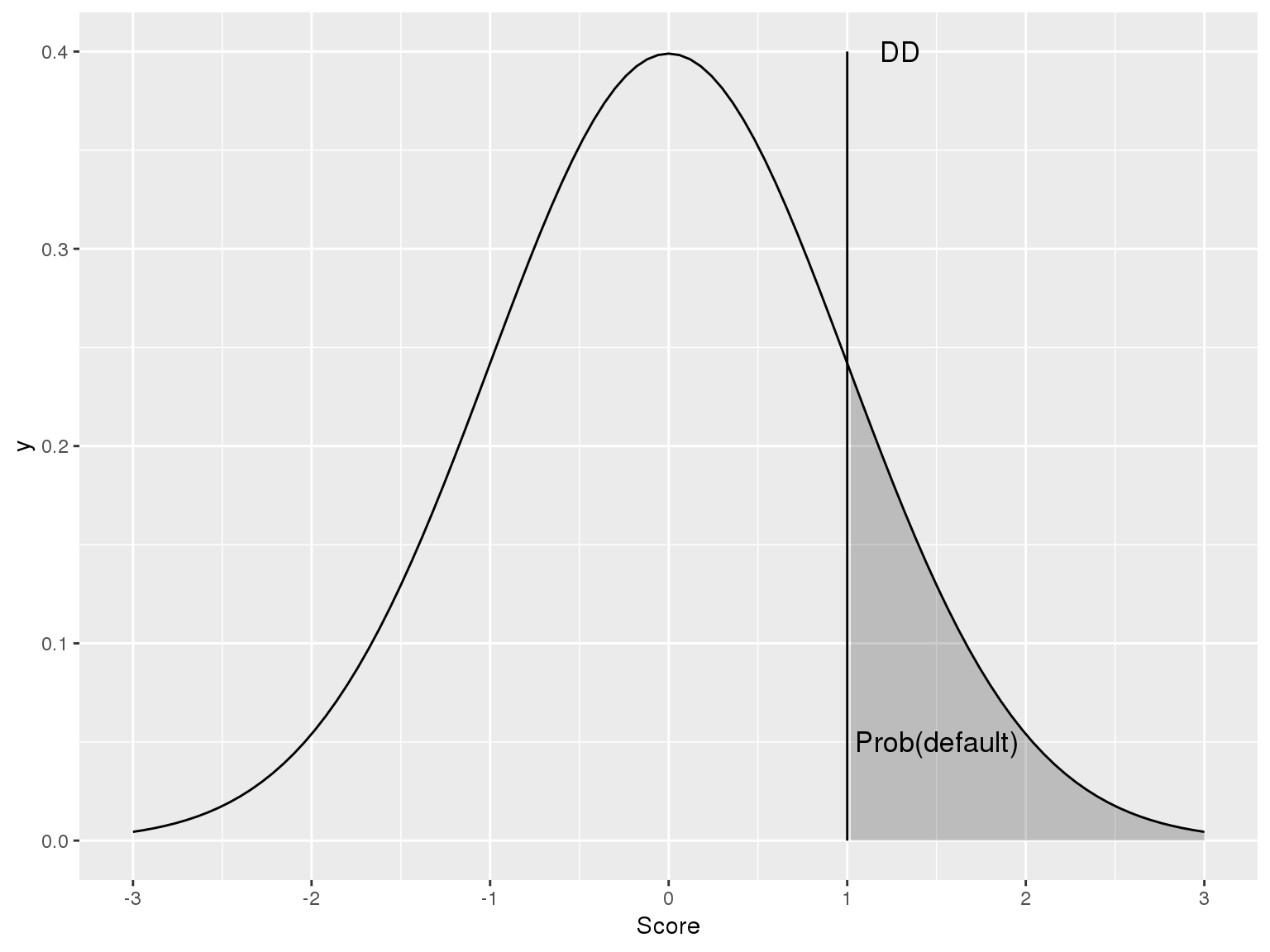

DD = \frac{\log(V_A / D) + (r-\frac{1}{2}\sigma_A^2)(T-t)}{\sigma_A \sqrt(T-t)}

# df_stock is an already prepped csv from CRSP data

df_stock$date <- as.Date(df_stock$date)

df <- left_join(df, df_stock[,c("gvkey", "date", "ret", "ret.sd")])## Joining, by = c("gvkey", "date")df_rf$date <- as.Date(df_rf$dateff)

df_rf$year <- year(df_rf$date)

df_rf$month <- month(df_rf$date)

df <- left_join(df, df_rf[,c("year", "month", "rf")])## Joining, by = c("year", "month")df <- df %>%

mutate(DD = (log(MVE / lt) + (rf - (ret.sd*sqrt(252))^2 / 2)) /

(ret.sd*sqrt(252)))

# Clean the measure

df <- df %>%

mutate_if(is.numeric, funs(replace(., !is.finite(.), NA)))ret.sd is daily return standard deviation

df %>%

filter(!is.na(DD),

!is.na(bankrupt)) %>%

group_by(bankrupt) %>%

mutate(mean_DD=mean(DD, na.rm=T),

prob_default =

pnorm(-1 * mean_DD)) %>%

slice(1) %>%

ungroup() %>%

select(bankrupt, mean_DD,

prob_default) %>%

html_df()| bankrupt | mean_DD | prob_default |

|---|---|---|

| 0 | 0.612414 | 0.2701319 |

| 1 | -2.447382 | 0.9928051 |

df %>%

filter(!is.na(DD),

!is.na(bankrupt),

year >= 2000) %>%

group_by(bankrupt) %>%

mutate(mean_DD=mean(DD, na.rm=T),

prob_default =

pnorm(-1 * mean_DD)) %>%

slice(1) %>%

ungroup() %>%

select(bankrupt, mean_DD,

prob_default) %>%

html_df()| bankrupt | mean_DD | prob_default |

|---|---|---|

| 0 | 0.8411654 | 0.2001276 |

| 1 | -4.3076039 | 0.9999917 |

fit_DD <- glm(bankrupt ~ DD, data=df, family=binomial)## Warning: glm.fit: fitted probabilities numerically 0 or 1 occurredsummary(fit_DD)##

## Call:

## glm(formula = bankrupt ~ DD, family = binomial, data = df)

##

## Deviance Residuals:

## Min 1Q Median 3Q Max

## -2.9848 -0.0750 -0.0634 -0.0506 3.6506

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -6.16401 0.15323 -40.23 < 2e-16 ***

## DD -0.24451 0.03773 -6.48 9.14e-11 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 718.67 on 21563 degrees of freedom

## Residual deviance: 677.18 on 21562 degrees of freedom

## (33618 observations deleted due to missingness)

## AIC: 681.18

##

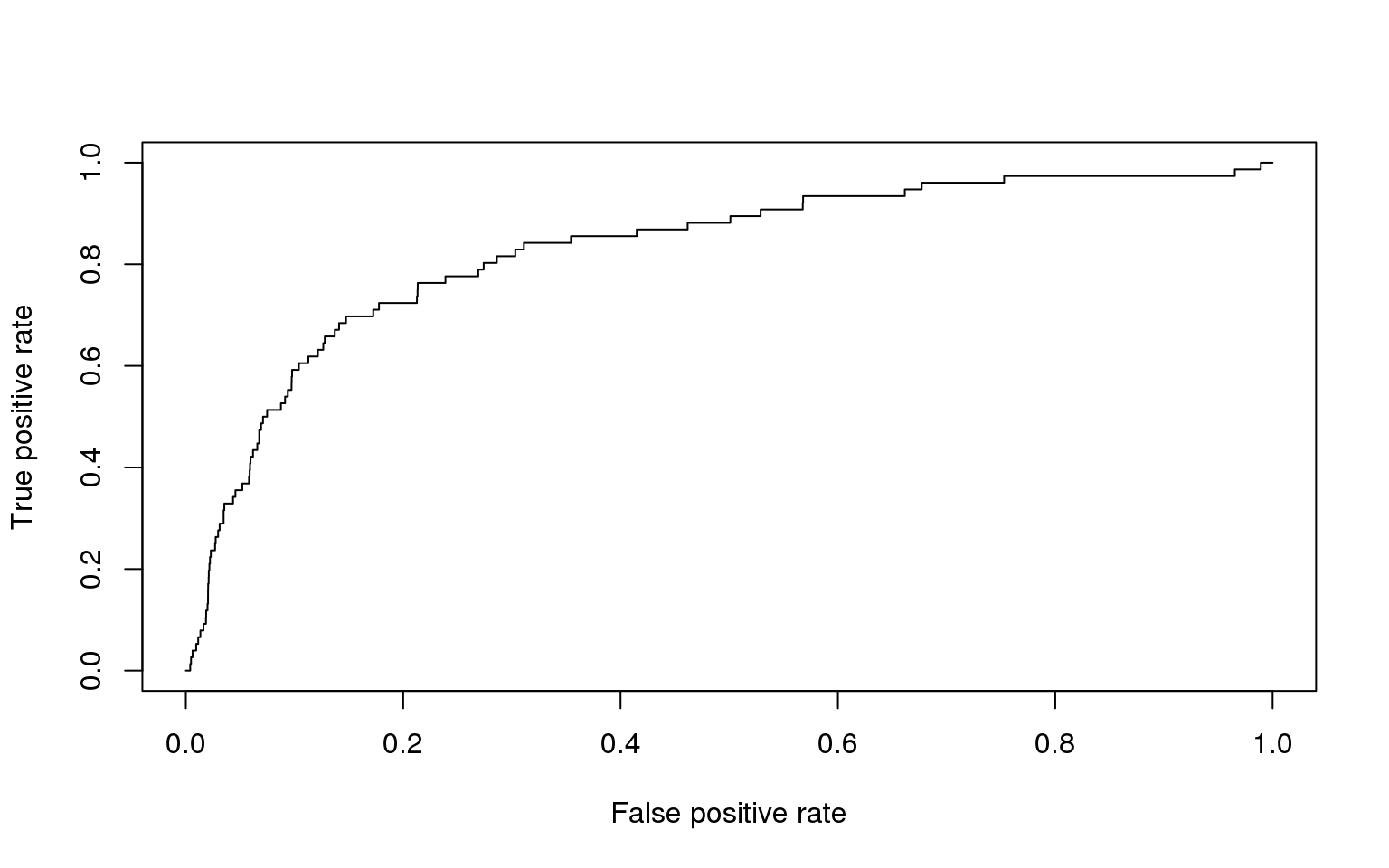

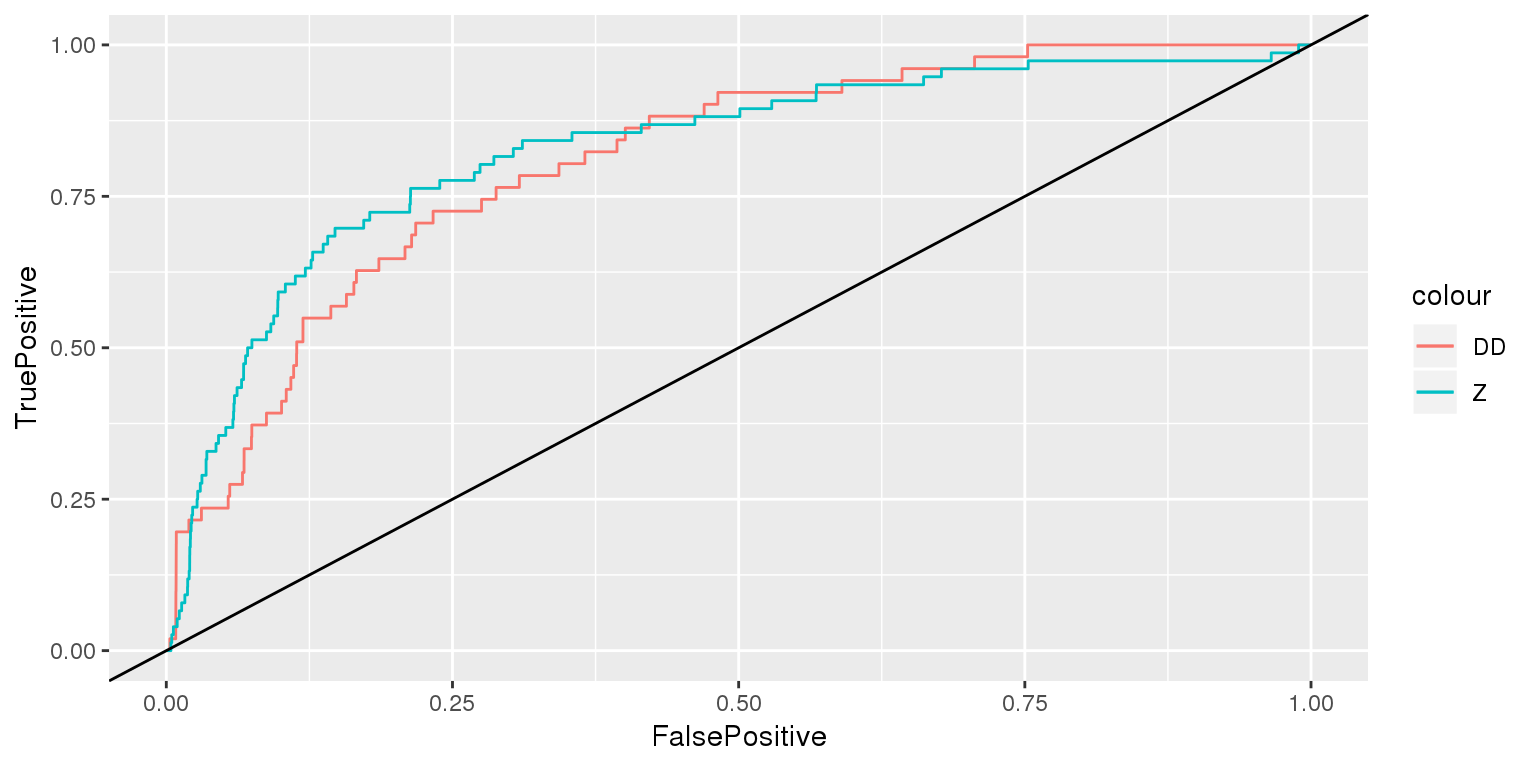

## Number of Fisher Scoring iterations: 9pred_DD <- predict(fit_DD, df, type="response")

ROCpred_DD <- prediction(as.numeric(pred_DD), as.numeric(df$bankrupt))

ROCperf_DD <- performance(ROCpred_DD, 'tpr','fpr')

df_ROC_DD <- data.frame(FalsePositive=c(ROCperf_DD@x.values[[1]]),

TruePositive=c(ROCperf_DD@y.values[[1]]))

ggplot() +

geom_line(data=df_ROC_DD, aes(x=FalsePositive, y=TruePositive, color="DD")) +

geom_line(data=df_ROC_Z, aes(x=FP, y=TP, color="Z")) +

geom_abline(slope=1)

#AUC

auc_DD <- performance(ROCpred_DD, measure = "auc")

AUCs <- c(auc_Z@y.values[[1]], auc_DD@y.values[[1]])

names(AUCs) <- c("Z", "DD")

AUCs## Z DD

## 0.8280943 0.8097803Both measures perform similarly, but Altman Z performs slightly better.

Why?

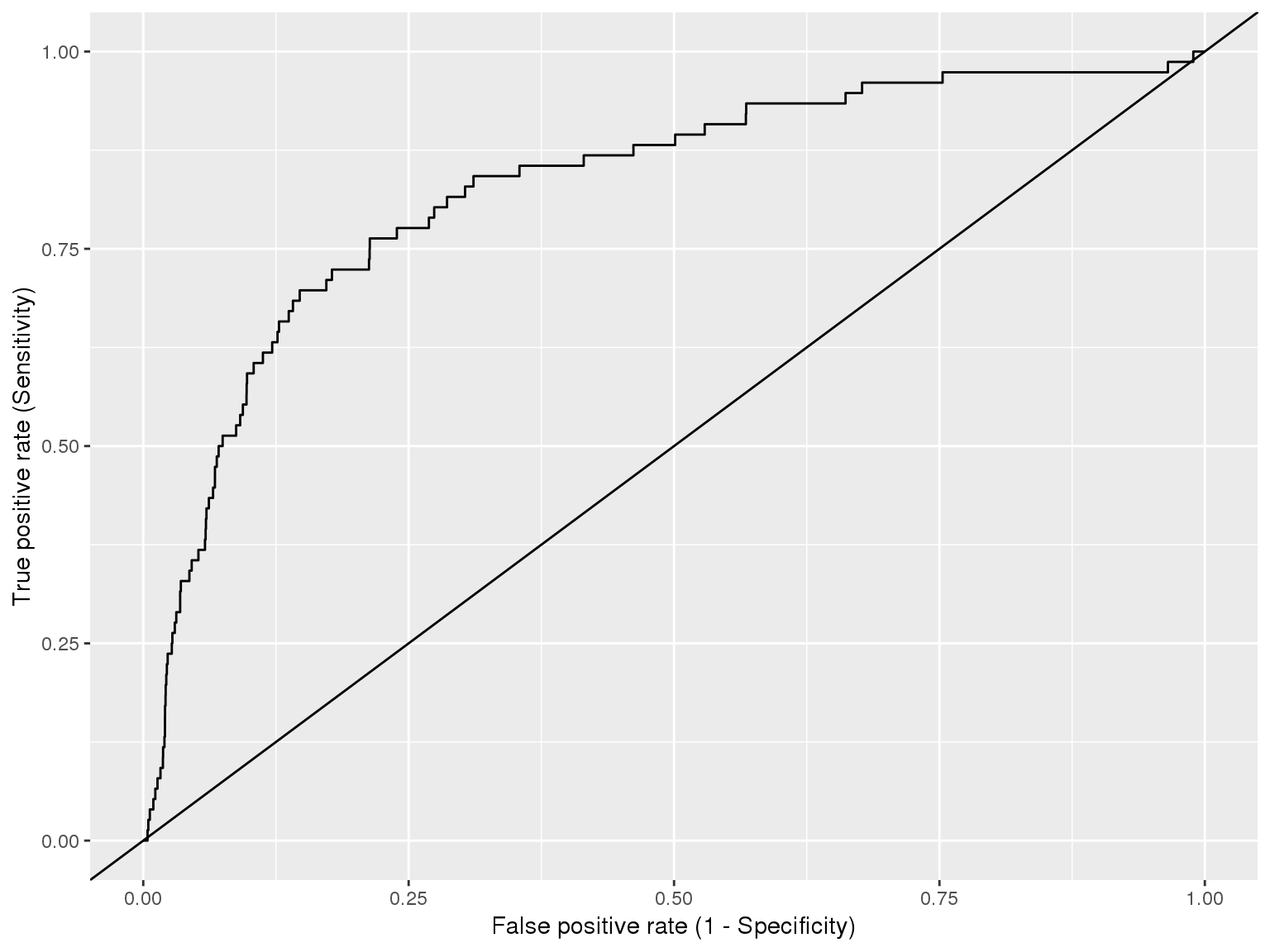

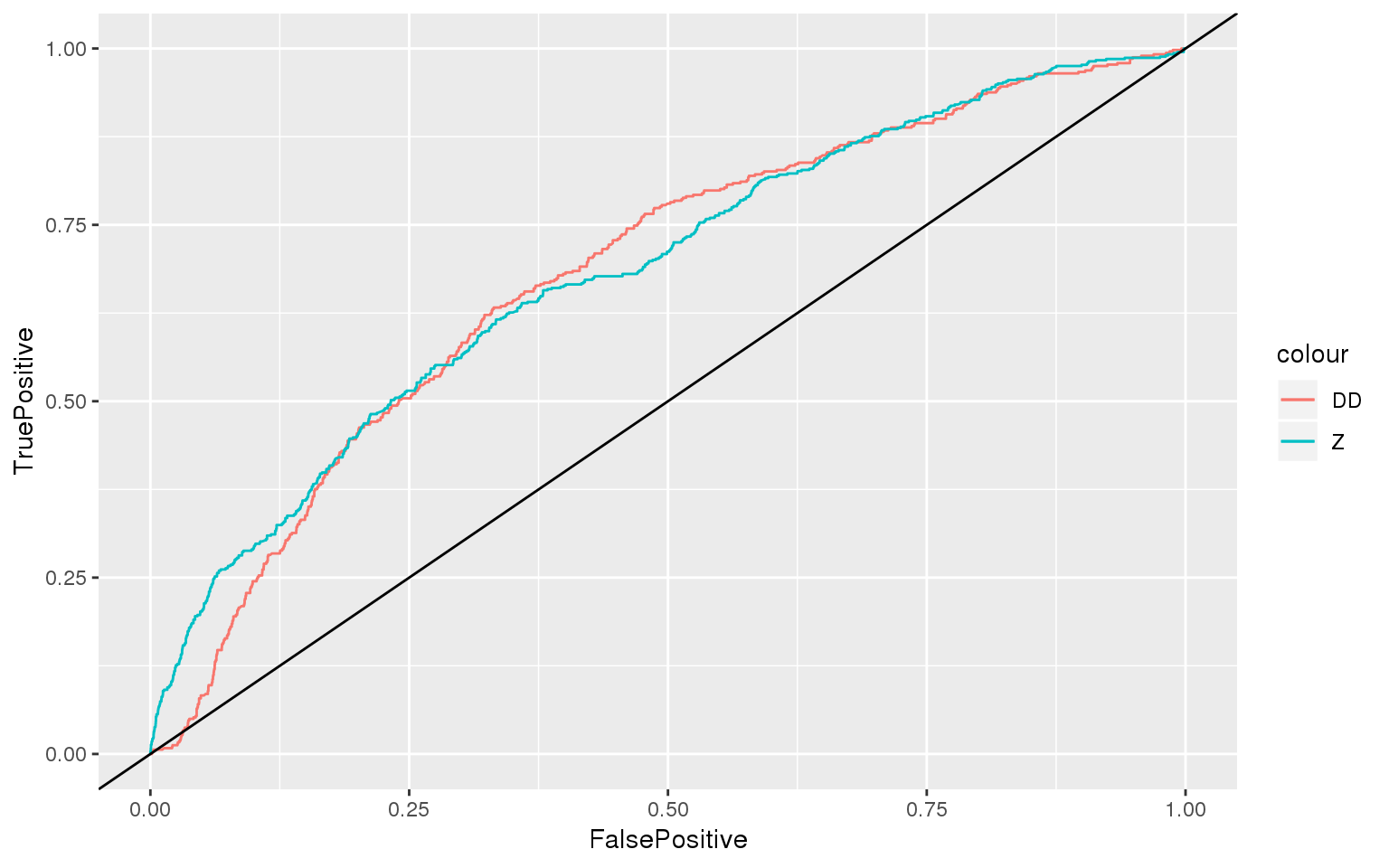

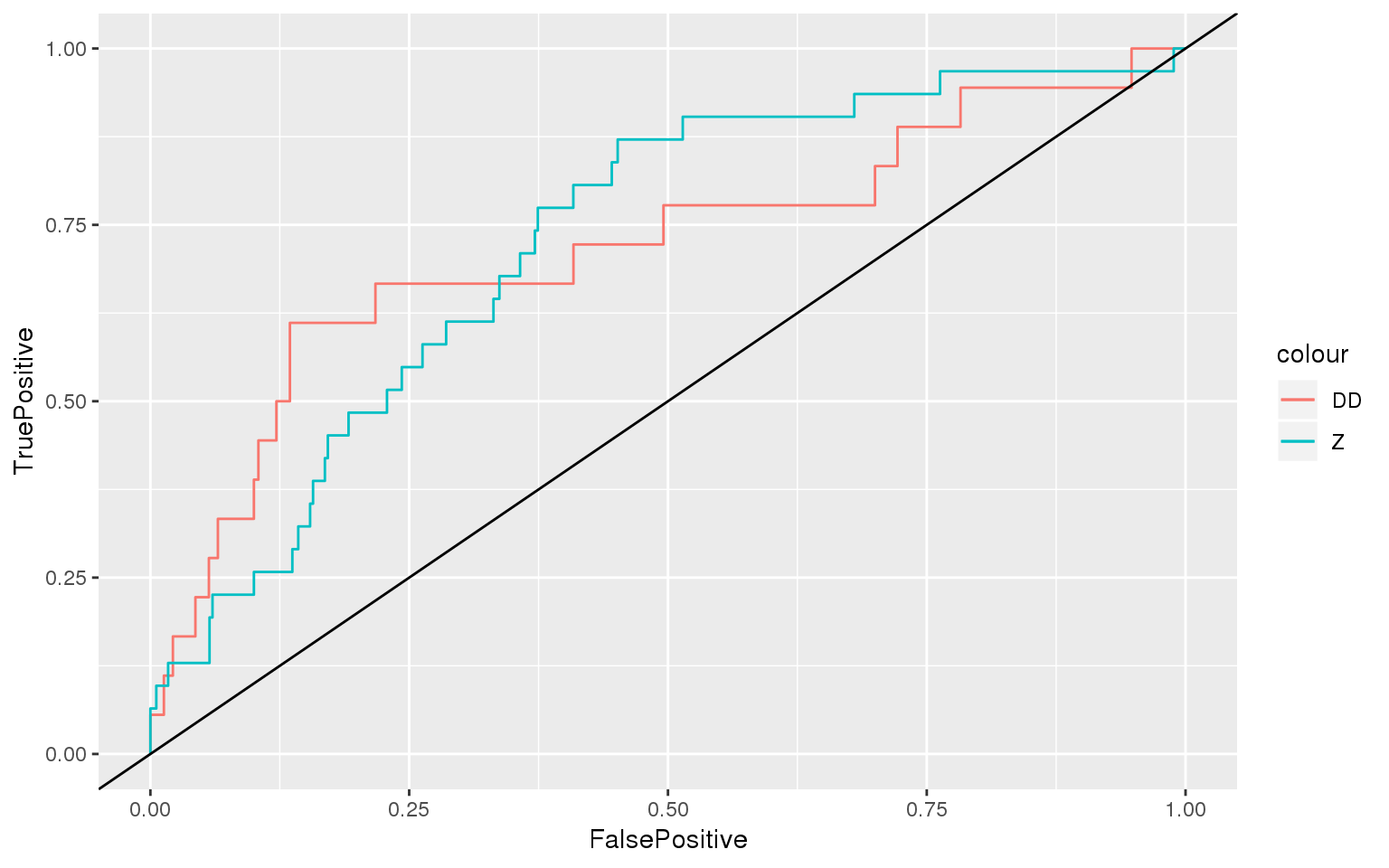

# calculate downgrade

df <- df %>% arrange(gvkey, date) %>% group_by(gvkey) %>% mutate(downgrade = ifelse(rating < lag(rating),1,0))

# training sample

train <- df %>% filter(year < 2015)

test <- df %>% filter(year >= 2015)

# glms

fit_Z2 <- glm(downgrade ~ Z, data=train, family=binomial)## Warning: glm.fit: fitted probabilities numerically 0 or 1 occurredfit_DD2 <- glm(downgrade ~ DD, data=train, family=binomial)summary(fit_Z2)##

## Call:

## glm(formula = downgrade ~ Z, family = binomial, data = train)

##

## Deviance Residuals:

## Min 1Q Median 3Q Max

## -1.1223 -0.5156 -0.4418 -0.3277 6.4638

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -1.10377 0.09288 -11.88 <2e-16 ***

## Z -0.43729 0.03839 -11.39 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 3874.5 on 5795 degrees of freedom

## Residual deviance: 3720.4 on 5794 degrees of freedom

## (47058 observations deleted due to missingness)

## AIC: 3724.4

##

## Number of Fisher Scoring iterations: 6summary(fit_DD2)##

## Call:

## glm(formula = downgrade ~ DD, family = binomial, data = train)

##

## Deviance Residuals:

## Min 1Q Median 3Q Max

## -1.7319 -0.5004 -0.4278 -0.3343 3.0755

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -2.36365 0.05607 -42.15 <2e-16 ***

## DD -0.22224 0.02035 -10.92 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 3115.3 on 4732 degrees of freedom

## Residual deviance: 2982.9 on 4731 degrees of freedom

## (48121 observations deleted due to missingness)

## AIC: 2986.9

##

## Number of Fisher Scoring iterations: 5

## Z DD

## 0.6839086 0.6811973

## Z DD

## 0.7270046 0.7183575What other data could we use to predict corporate bankruptcy as it relates to a company’s supply chain?